Published - Tue, 06 Dec 2022

A list of frequently asked SEO interview questions and answers is given below.

SEO stands for Search Engine Optimization. It is the process of improving the visibility of a website or web page in search engines so that it appears higher in search engine result pages, which increases both the quantity and the quality of traffic to the website.

It optimizes websites for search engines and thus helps them achieve a higher ranking in search engine result pages when users search for keywords related to their products or services. In this way, it improves the quality as well as the quantity of traffic that a website receives through organic search results. Some of the basic activities involved in an SEO process are as follows:

They are called SEO executives, webmasters, website optimizers, digital marketing experts, etc.

SEO executive: Responsible for increasing the number of visitors or traffic to a website using various SEO tools and strategies.

SEO/SMO analyst: Responsible for planning and implementing SEO and social media strategies for clients. An analyst is expected to quickly understand and support initiatives that achieve the objectives and goals of client campaigns.

Webmaster: Responsible for maintaining one or more websites and ensuring that the web servers, software, and hardware are working smoothly.

Digital Marketing Expert: Responsible for planning and executing digital marketing programs that promote the brand or increase the sales of the clients' products and services.

Google webmaster tools, Google Analytics, Open site explorer, Alexa, and Website grader are some of the free and basic tools that are commonly used for SEO. There are also many paid tools available in the market, such as SEOMoz, Spyder Mate, and Bulkdachecker.

Google webmaster tools: It is one of the most useful SEO tools and is designed for webmasters. It helps webmasters communicate with Google and evaluate and maintain their website's performance in search engine results. Using it, one can identify issues related to a website, such as crawling errors and malware issues. It is a free service offered by Google, and anyone who has a site can use it.

Google analytics: It is a free Web analytics service offered by Google. It is designed to provide analytical and statistical tools for SEO and digital marketing. It enables you to analyze website traffic and other activities that take place on your website. Anyone who has a Google account can use this service.

Open site explorer: It is a tool powered by the Mozscape index that is designed to research backlinks, find link-building opportunities, and identify links that may harm rankings.

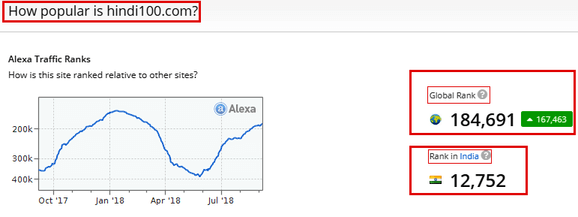

Alexa: It is a global ranking system that compiles a list of most popular websites based on the web traffic data and accordingly provides Alexa Rank to a website. The lower the Alexa rank, the more popular a website, e.g., a site with rank 150 will have more visitors than a site with rank 160.

Website grader: It is a free online tool designed to grade a website against some critical metrics like performance, SEO, security and mobile readiness.

Some Paid SEO Tools:

SEOMoz: It is a premium web application for SEO. It is a collection of SEO tools that cover all vital areas of SEO and provide analytics and insights to improve your search engine rankings.

Spyder Mate: This software allows you to improve the ranking of your website as well as offers various methods to promote a website. It enables you to manage your web portal to attract more and more traffic to your site.

Bulkdachecker: It is used to check the Domain Authority of Multiple Websites simultaneously.

On page SEO: It means optimizing your website by making changes to the title, meta tags, structure, robots.txt, etc. It involves optimizing individual web pages, which improves their ranking and attracts more relevant traffic to your site. In this type of SEO, one can optimize both the content and the HTML source code of a page.

Main aspects of On page SEO are:

Off page SEO: It means optimizing your websites through backlinks, social media promotion, blog submission, press releases submission, etc.

Main aspects of Off page SEO are:

In on page SEO, optimization is done on the website itself: it involves making changes to the title tag, meta tags, site structure, and site content, solving canonicalization problems, managing robots.txt, etc. In off page SEO, by contrast, the primary focus is on building backlinks and social media promotion.

On page SEO techniques:

It mainly involves optimizing the page elements such as:

Off page SEO techniques:

It mainly focuses on the following methods:

There are several Off page SEO techniques such as:

Google is an American multinational company that specializes in Internet-based products and services. Its services include a search engine, online advertising, cloud computing, software, and more.

It was co-founded in 1998 by Larry Page and Sergey Brin, both American computer scientists and internet entrepreneurs.

A search engine is a web-based software program developed to search for and find information on the World Wide Web (WWW). The user enters keywords or phrases into the search engine, which then looks for websites, web pages, or documents containing matching keywords and presents them as a list in the search engine result pages.

In other words, it answers the queries entered by users in the form of a list of search results. Some of the popular search engines are Google, Bing, and Yahoo.

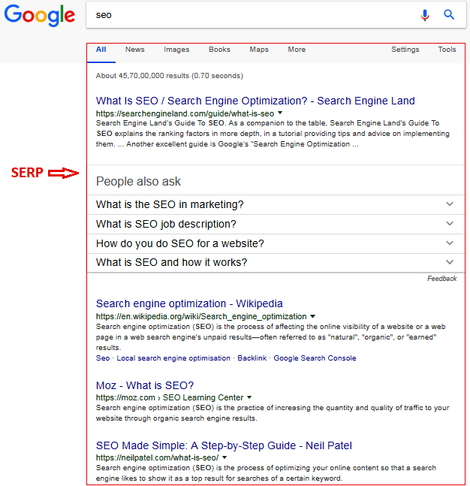

A search engine result page is the list of results for a user's search query and is displayed by the search engine. It is displayed in a browser window when the users enter their search queries in the search field on a search engine page.

The results are generally a list of links to web pages, ranked from the most relevant to the least relevant. Each link is shown with the title and a short description of its web page. Besides the list of search results, a SERP may also include advertisements.

To understand how a search engine works, we can divide its work into three stages: crawling, indexing, and retrieval.

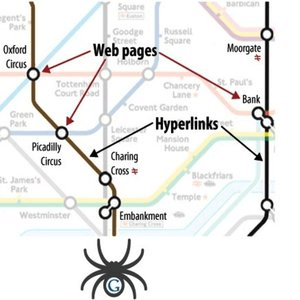

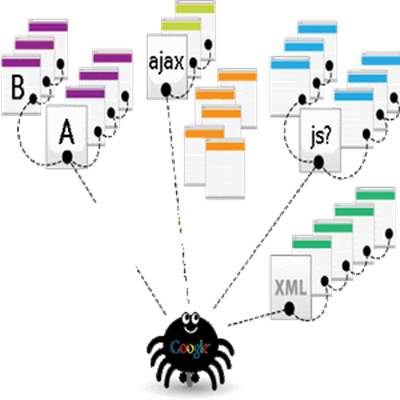

Crawling: It is performed by software robots called web spiders or web crawlers. Each search engine has its own web spiders to perform crawling. In this step, the spiders visit websites or web pages, read them, and follow the links to other web pages. By crawling, they find out what is published on the World Wide Web. Once the crawler visits a page, it makes a copy of that page and adds its URL to the index.

The web spider generally starts crawling with heavily used servers and popular web pages. It follows the route determined by the link structure and finds new interconnected documents through new links. It also revisits the previous sites to check for the changes or updates in the web pages. If changes are found, it makes a copy of the changes to update the index.

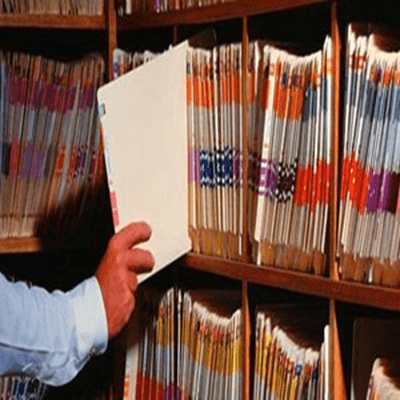

Indexing: It involves building an index after crawling all websites or web pages found on the World Wide Web. An index of the crawled sites is made based on the type and quality of information provided by them and stored in huge storage facilities. It is like a book that contains a copy of each webpage crawled by the spider. Thus, it collects and organizes the information from all over the internet.

Retrieval: In this step, the search engine responds to users' search queries by providing a list of websites with relevant answers or information in a particular order. It places the most relevant websites, which offer unique and original information, at the top of the search engine result pages. So, whenever a user performs an online search, the search engine searches its database for web pages with relevant information, orders them by relevance, and presents the list to the user on the search engine result pages.
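The three stages can be sketched in a few lines of Python. This is only a toy illustration under stated assumptions: the `PAGES` dictionary is a hypothetical in-memory stand-in for the web, and the function names `crawl`, `build_index`, and `retrieve` are made up for this example. Real search engines are far more elaborate, and retrieval here matches words without ranking them.

```python
from collections import defaultdict, deque

# Hypothetical in-memory "web": URL -> (page text, outgoing links).
PAGES = {
    "https://example.com/": (
        "seo basics and tips",
        ["https://example.com/crawl", "https://example.com/index"],
    ),
    "https://example.com/crawl": ("how crawling works", ["https://example.com/"]),
    "https://example.com/index": ("how indexing works after crawling", []),
}

def crawl(seed):
    """Crawling: visit a page, keep a copy of it, and follow its links."""
    seen, queue, copies = {seed}, deque([seed]), {}
    while queue:
        url = queue.popleft()
        text, links = PAGES[url]
        copies[url] = text
        for link in links:
            if link in PAGES and link not in seen:
                seen.add(link)
                queue.append(link)
    return copies

def build_index(copies):
    """Indexing: map each word to the set of URLs whose text contains it."""
    index = defaultdict(set)
    for url, text in copies.items():
        for word in text.lower().split():
            index[word].add(url)
    return index

def retrieve(index, query):
    """Retrieval: return the URLs that contain every word of the query."""
    words = query.lower().split()
    if not words:
        return []
    matches = set.intersection(*(index.get(word, set()) for word in words))
    return sorted(matches)  # sorted only to make the output deterministic

index = build_index(crawl("https://example.com/"))
print(retrieve(index, "crawling works"))
```

The query returns the two pages whose text contains both words; a real engine would additionally order them by relevance.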

The anchor tag is used to create clickable text in a hyperlink. It enhances the user experience by taking users directly to a specific area of a web page, so they do not need to scroll through the page to find a particular section. It is therefore a way to improve navigation.

It also enables webmasters to keep things in order, as there is no need to create different web pages or split up a document. Google can also send users to a specific part of your page using this tag. You can attach an anchor tag to a word or a phrase. It takes the reader to a different section of the same page instead of another page. When you use this tag, you create a unique URL on the same page.

The content presented in a Flash website is hard for search engines to parse, so it is preferable to build a website in HTML from an SEO perspective.

Page title, H1, Body text or content, Meta title, Meta description, Anchor links and Image Alt Tags are some of the most important areas where we can include our keywords for the SEO purpose.

Google Webmaster Tools (GWT) is a free service by Google that provides indexing data, backlink information, crawl errors, search queries, CTR, and website malware errors, and lets you submit an XML sitemap. It is a collection of SEO tools that allows you to control how your site appears in Google and allows Google to communicate with webmasters. For example, if anything goes wrong, such as crawling errors, a large number of 404 pages, manual penalties, or detected malware, Google will notify you through this tool. If you use GWT, you may not need some of the costlier tools. So, it is a free toolset that helps you understand what is going on with your site.

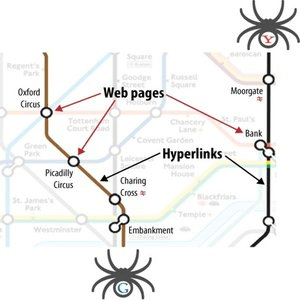

Many search engines use programs called spiders to index websites. Spiders are also known as crawlers or robots. They act as automatic data searching tools that visit sites to find new or updated web pages and links. This process is called web crawling. Spiders follow hyperlinks and gather textual and meta information for the search engine databases. They collect as much information as they can before relaying it to the search engine's servers.

Spiders may also rate the content being indexed to help the search engine determine relevancy levels to a search. They are called spiders as they visit many sites simultaneously, i.e. their legs keep spanning a large area of the web. All search engines use spiders to revise and build their indexes.

Meta tags are HTML tags that are used to provide information about the content of a web page. They are basic elements of SEO. Meta tags are placed in the "head" section of an HTML document, e.g. `<head> Meta tags are placed here </head>`.
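To make the placement concrete, here is a minimal sketch that reads the meta tags out of the head section using Python's standard `html.parser` module. The class name `MetaTagParser` and the `SAMPLE` page are assumptions made up for this illustration.

```python
from html.parser import HTMLParser

class MetaTagParser(HTMLParser):
    """Collects name/content pairs from <meta> tags found inside <head>."""

    def __init__(self):
        super().__init__()
        self.in_head = False
        self.meta = {}

    def handle_starttag(self, tag, attrs):
        if tag == "head":
            self.in_head = True
        elif tag == "meta" and self.in_head:
            attributes = dict(attrs)
            name = attributes.get("name")
            content = attributes.get("content")
            if name and content is not None:
                self.meta[name.lower()] = content

    def handle_endtag(self, tag):
        if tag == "head":
            self.in_head = False

# Hypothetical page used only for this example.
SAMPLE = """<html><head>
<title>Example page</title>
<meta name="description" content="A short summary of the page.">
<meta name="robots" content="index, follow">
</head><body><p>Hello</p></body></html>"""

parser = MetaTagParser()
parser.feed(SAMPLE)
print(parser.meta)
```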

The Meta tags are of three types. Each tag provides specific information about the content of the page. For example:

A title tag is an HTML element that is used to specify the title of a page. It is displayed on search engine result pages as a clickable heading just above the URL and at the top of the browser.

In SEO, the title tag is significant. It is highly recommended to include a unique and relevant title that correctly describes the content of a webpage or a website. Thus, it tells the users and search engines about the nature or type of information contained in your webpage.

An ideal title should be between 50 and 60 characters long. You can also place your primary keywords at the start of your title and put the least important keywords at the end. It is the first thing that a search engine analyzes before ranking your webpage.

No, Google does not use the keyword meta tags in web search rankings. It is believed that Google ignores the meta tags keywords due to their misuse.

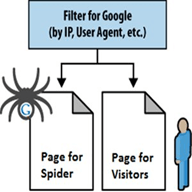

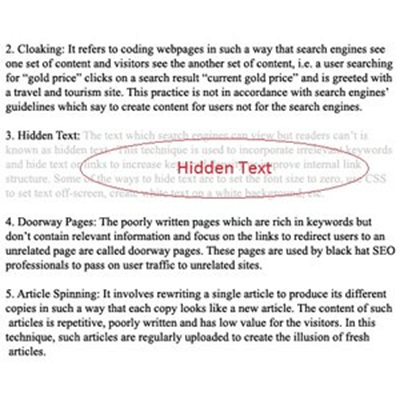

Cloaking is a black hat SEO technique that enables you to create two different pages. One page is designed for the users, and the other is created for the search engine crawlers. It is used to present different information to the user than what is presented to the crawlers. Cloaking is against the guidelines of Google, as it provides users with different information than they expected. So, it should not be used to improve the SEO of your site.

Some Examples of Cloaking:

HTML is a case-insensitive language: tag and attribute names can be written in uppercase or lowercase, and the browser treats them the same way. However, HTML is generally written in lowercase.

SEO: It is a process of increasing the online visibility, organic (free) traffic or visitors to a website. It is all about optimizing your website to achieve higher rankings in the search result pages. It is a part of SEM, and it gives you only the organic traffic.

Two types:

SEM: It stands for search engine marketing. It involves purchasing space on the search engine result pages. It goes beyond SEO and includes other methods that can bring you more traffic, such as PPC advertising. It is a part of your overall internet marketing strategy. The traffic generated through SEM is considered especially valuable because it is targeted.

SEM includes both SEO and paid search. It generally makes use of paid searches such as pay per click (PPC) listings and advertisements. Search ad networks generally follow pay-per-click (PPC) payment structure. It means you only pay when a visitor clicks on your advertisement.

The title tag should be kept to about 50 to 60 characters, as Google generally displays only the first 50 to 60 characters of the title tag. So, if your title is under 60 characters, it is more likely to be displayed in full. Similarly, the meta description should be kept to about 160 characters, as search engines tend to truncate descriptions longer than 160 characters.
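A simple length check along these lines might look as follows. The limits of 60 and 160 characters are taken from the display and truncation figures mentioned above; they are rules of thumb rather than fixed values published by any search engine, and the function name `check_lengths` is hypothetical.

```python
TITLE_LIMIT = 60         # roughly how many title characters are displayed
DESCRIPTION_LIMIT = 160  # longer descriptions tend to be truncated

def check_lengths(title, description):
    """Return a list of warnings for an over-long title or meta description."""
    warnings = []
    if len(title) > TITLE_LIMIT:
        warnings.append(
            f"title has {len(title)} characters; it may be cut off after {TITLE_LIMIT}"
        )
    if len(description) > DESCRIPTION_LIMIT:
        warnings.append(
            f"description has {len(description)} characters; "
            f"it may be cut off after {DESCRIPTION_LIMIT}"
        )
    return warnings

print(check_lengths("SEO Interview Questions and Answers", "A list of common SEO questions."))
```

An empty list means both values fit within the assumed limits.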

We should follow the following instructions to decrease the loading time of a website:

The webmaster tool used to be preferred over the Analytics tool because it includes almost all the essential tools as well as some analytics data for search engine optimization. However, since webmaster data is now included in Analytics, we would prefer to have access to Analytics.

A spider is a program used by search engines to index websites. It is also called a crawler or search engine robot. It visits websites, reads their pages, and creates entries for the search engine index. Spiders act as data searching tools that visit websites to find new or updated content, pages, and links.

Spiders do not monitor sites for unethical activities. They are called spiders as they visit many sites simultaneously, i.e., they keep spanning a large area of the web. All search engines use spiders to build and update their indexes.

If your website is banned by the search engines for using black hat practices and you have corrected the wrongdoings, you can apply for reinclusion. So, it is a process in which you ask the search engine to re-index your site that was penalized for using black hat SEO techniques. Google, Yahoo, and other search engines offer tools where webmasters can submit their site for reinclusion.

Robots.txt is a text file that gives instructions to search engine crawlers about the crawling and indexing of a web page, domain, directory, or file of a website. It is generally used to tell spiders which pages you don't want to be crawled. Following it is not mandatory for search engines, yet search engine spiders generally follow the instructions in robots.txt.

The location of this file is very significant. It must be located in the root (main) directory of the site; otherwise, the spiders will not be able to find it, as they do not search the whole site for a file named robots.txt. They only check the root directory for this file, and if they don't find it there, they assume that the site does not have a robots.txt file and crawl the whole site.
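As an illustration of how a well-behaved crawler might consult these rules, the sketch below uses `urllib.robotparser` from Python's standard library. The robots.txt content, the site `www.example.com`, and the crawler name `ExampleBot` are all assumptions for this example; the rules are parsed from a string so that no network access is needed.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content, as it would be served from the site root.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
"""

rules = RobotFileParser()
rules.parse(ROBOTS_TXT.splitlines())

def allowed(path, agent="ExampleBot"):
    """Check whether the given crawler may fetch a path on the example site."""
    return rules.can_fetch(agent, "https://www.example.com" + path)

print(allowed("/index.html"))      # not covered by any Disallow rule
print(allowed("/private/a.html"))  # falls under Disallow: /private/
```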

Keyword proximity refers to the distance between the keywords, i.e., it tells how close keywords are to each other in a phrase or body of text. It is used to measure the distance between two keywords in the text. It is used by some search engines to measure the relevancy of a given page to the search request. It specifies that the closer the two keywords in a phrase or a search term, the more relevant will be the phrase. For example, see the keywords "Delhi Digital Photographer" in the search term "Delhi Photographer Ram Kumar specialized in digital photography." The proximity between Delhi and Photographer is excellent, but between the "Photographer" and "digital" proximity is not good as there are four words between them. So, a search term's keywords should be as close to each other as possible.
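The word-counting in this example can be reproduced with a short function. This is only a sketch of the idea of proximity as "words in between"; the function name `words_between` is hypothetical, and search engines do not publish how they measure proximity.

```python
import re

def words_between(text, first, second):
    """Count the words separating the first occurrences of two keywords.

    Returns None if either keyword does not occur in the text.
    """
    words = re.findall(r"[a-z0-9]+", text.lower())
    try:
        i = words.index(first.lower())
        j = words.index(second.lower())
    except ValueError:
        return None
    return max(abs(i - j) - 1, 0)

phrase = "Delhi Photographer Ram Kumar specialized in digital photography."
print(words_between(phrase, "Delhi", "Photographer"))    # adjacent keywords
print(words_between(phrase, "Photographer", "digital"))  # four words in between
```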

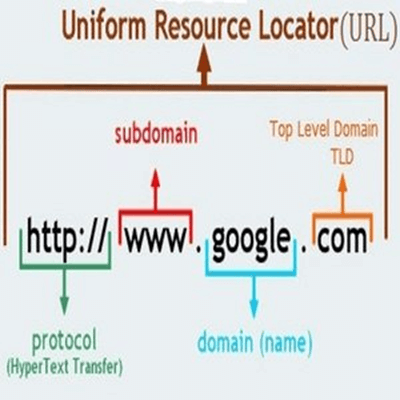

URL stands for Uniform Resource Locator. It is the web address of an online resource such as a website, web page, or document on the internet. It gives the location and name of the resource as well as the protocol used to access it, i.e., it locates an existing resource on the internet. A URL may contain as many as six parts and cannot have fewer than two. For example, the URL http://www.example.com has two parts: a protocol (http) and a domain (www.example.com).
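The parts of a URL can be inspected with `urllib.parse.urlparse` from Python's standard library. The sketch below is one possible way to break a URL into parts; the helper name `url_parts`, the labels it uses, and the longer sample URL are assumptions made for this illustration.

```python
from urllib.parse import urlparse

def url_parts(url):
    """Return the non-empty parts of a URL as a dictionary."""
    parsed = urlparse(url)
    parts = {
        "protocol": parsed.scheme,
        "domain": parsed.hostname,
        "port": parsed.port,
        "path": parsed.path,
        "query": parsed.query,
        "fragment": parsed.fragment,
    }
    return {name: value for name, value in parts.items() if value}

print(url_parts("http://www.example.com"))
print(url_parts("https://www.example.com:8080/toys/cars?color=yellow#reviews"))
```

The first call yields only a protocol and a domain, while the second shows a URL in which all six parts are present.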

A URL for HTTP or HTTPS generally comprises three or four components, such as:

We use hyphens to separate the words in a URL. See the image:

A domain name is the name of your website. It identifies one or more IP addresses, e.g., the domain name "google.com" may resolve to an IP address such as "74.125.127.147". Domain names were developed because it is easier to remember a name than a long string of numbers.

A domain name is displayed in the address bar of the web browser. It may consist of any combination of letters and numbers and can be used with various domain name extensions such as .com, .net, and more. A domain name is always unique, i.e., no two websites can have the same domain name.

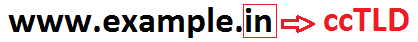

A TLD is the last part of an Internet address. For example, in xyz.com the TLD is .com.

A ccTLD is a country code top-level domain extension that is assigned to a country. It is based on the ISO 3166-1 alpha-2 country codes, which means it can have only two characters, e.g., .us for the United States, .au for Australia, .in for India. So these domain extensions are reserved for countries. See the image given below:

An internal link is a link placed on your website that points to another page of the same website. It is different from an external link, which leads to another website. Internal links are very useful for SEO as they:

The backlinks are an indication of the popularity of a website. They are important for SEO as most of the search engines like Google give more value to websites that have a large number of quality backlinks, i.e., a site with more backlinks is considered more relevant than a site with fewer backlinks.

Backlinks should be relevant, which means they should come from sites whose content is related to your site or pages. Links coming from sites with unrelated content will be treated as irrelevant backlinks by the search engine. For example, if a website about how to rescue orphaned dogs receives a backlink from a website about dog care essentials, a search engine would assess it as more relevant than a link from a site about automobiles.

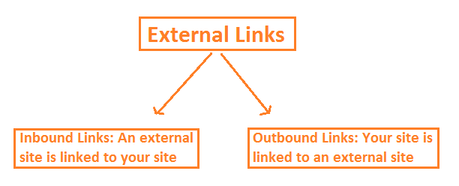

An inbound link, which is also known as a backlink, is an incoming link to your site from an external source. An outbound link, by contrast, is a link that starts from your site and points to another website. For example, if xyz.com links to your domain, this link is an inbound link or backlink for your domain. However, it is an outbound link for xyz.com. See the image given below:

Link popularity refers to the number of links that point towards a website. These links can be of two types: internal and external. Links to a website from its own pages are called internal links, and links from outside sources or other websites are called external links.

High link popularity indicates that more people are connected to your site and that it has relevant information. Most search engines use link popularity in their algorithms when ranking websites in the SERPs. For example, if two websites have the same level of SEO, the site with higher link popularity will be ranked higher by the search engine.
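The distinction between internal and external links can be sketched by comparing host names. This is a simplified illustration: the function name `classify_links` and the sample URLs are hypothetical, and treating "same host name" as "same website" is an assumption that ignores subdomains.

```python
from urllib.parse import urljoin, urlparse

def classify_links(page_url, hrefs):
    """Split the links found on a page into internal and external ones."""
    site = urlparse(page_url).hostname
    internal, external = [], []
    for href in hrefs:
        absolute = urljoin(page_url, href)  # resolve relative links first
        if urlparse(absolute).hostname == site:
            internal.append(absolute)
        else:
            external.append(absolute)
    return internal, external

internal, external = classify_links(
    "https://www.example.com/blog/",
    ["/about", "post-1", "https://xyz.com/page"],
)
print(internal)
print(external)
```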

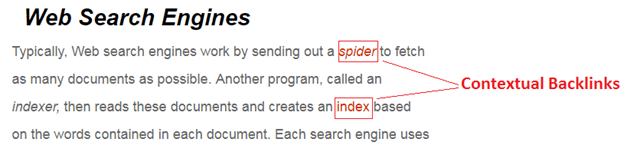

Contextual backlinks are the links to external websites that are placed within the content of a web page, i.e., they are part of the content of a page. They are generally built from high authority web pages. These links can be used to build your keyword ranking higher in Search Engines and to increase the domain trust online. See the image:

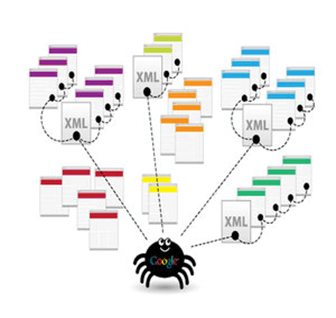

Crawling refers to an automated process that enables search engines to visit web pages and gather URLs for indexing. When the crawler visits a page, it makes a copy of that page and adds its URL to the index which is called indexing. The search engines have software robots, which are known as web spiders or web crawlers, for crawling.

The crawler not only reads the pages but also follows the internal and external links. Thus, crawlers can find out what is published on the World Wide Web. They also revisit previously crawled sites to check for changes or updates in their web pages. If changes are found, the index is updated accordingly.

Indexing starts after crawling. It is a process of building an index by adding URLs of web pages crawled by the crawlers. An index is a place where all the data that has been crawled is stored, i.e., it is like a huge book with a copy of each page that is crawled. Whenever users enter search queries, it is the index that provides the results for search queries within seconds. Without an index, it won't be possible for a search engine to render search results so fast.

So, the index is made of the URLs of different web pages visited by the crawler. The information contained in these web pages is what search engines provide to users in response to their queries. If a page is not added to the index, it can't appear in the search results.

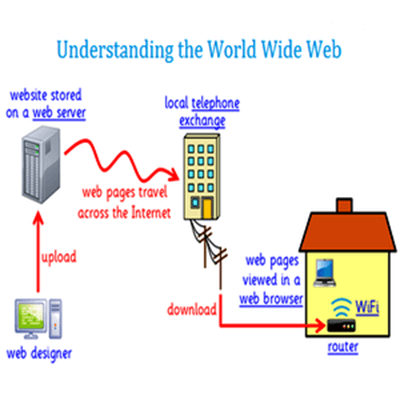

The World Wide Web is like a huge book whose pages are located on multiple servers throughout the world. These web pages contain information and are connected by hypertext links. In a book, we move from one page to another in a sequence, but on the World Wide Web, we follow hypertext links to visit the desired page.

So, it is a network of internet servers containing information in the form of web pages, audios, videos, etc. These web pages are formatted in HTML and accessed via HTTP.

The World Wide Web was invented by Tim Berners-Lee, who proposed it in 1989; it became publicly available in 1991. It is different from the internet, which is the underlying global network used to access the World Wide Web.

A website is a collection of interlinked web pages or formatted documents that share a single domain and can be accessed over the internet. It may contain only one page or tens of thousands of pages and can be created by an individual, a group, or an organization. It is identified by a domain name or web address. For example, when you type a web address into a browser, you arrive at the home page of that website.

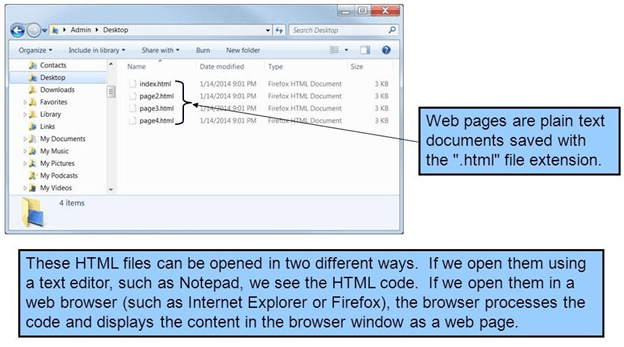

A web page is what you see on the screen of a computer or mobile when you type a web address or click on a link or enter a query in a search engine like Google, Bing, etc. A web page typically contains information that may include text, graphics, images, animation, sound, video, etc. See the image given below:

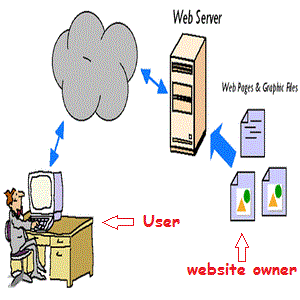

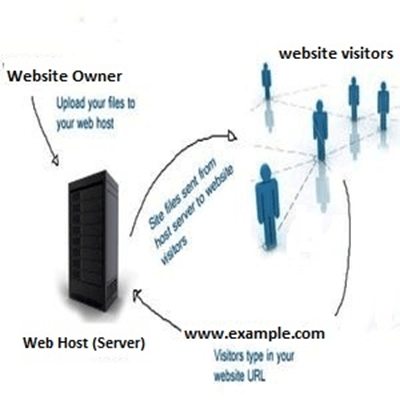

A web server is a computer program that is designed to serve or deliver web pages to users in response to requests made by their computers or HTTP clients. In other words, it hosts websites on the internet. It uses HTTP (Hypertext Transfer Protocol) to serve pages to the computers that are connected to it. See the image given below for more clarification!

Web hosting is a service that provides space for websites or web pages on special computers called servers. It enables sites or web pages to be viewed on the internet. When users type the website address (domain name) into their browser, their computers connect to the server, and your web pages are delivered to them through the browser.

Websites are stored and hosted on servers, so if you want users to see your website, you need to publish it with a web hosting service. Hosting is generally measured in terms of the disk space you are allotted on the server and the bandwidth you require for accessing the server. While choosing a web hosting service, one should evaluate the reliability and customer service of the provider.

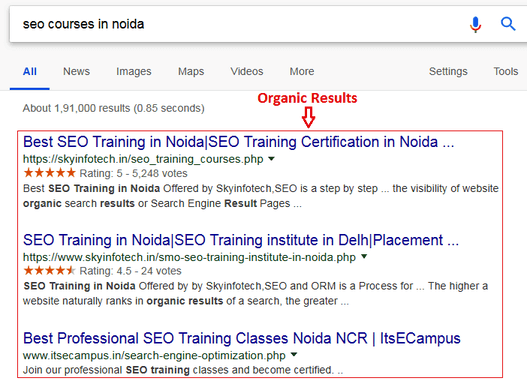

Organic results refer to the listings of web pages on the SERPs that appear because of organic SEO such as relevance to the search term or keywords and free white hat SEO techniques. They are also known as free or natural results. The search engine marketing (SEM) is not involved in producing organic results. So, organic results are the natural way of getting top ranking in SERP. The main purpose of SEO is to get a higher ranking for a web page in organic results of the search engines.

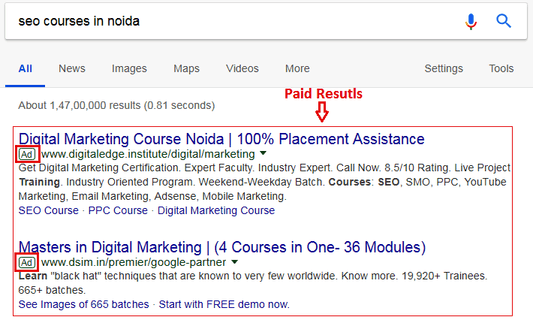

Paid search results are the sponsored ads or links that appear on the SERPs. They are part of Search Engine Marketing, in which you pay to place your websites or ads at the top of the result pages. Website owners who have a good budget and want results quickly generally pay Google to display their websites at the top of the result pages for specific search terms or keywords.

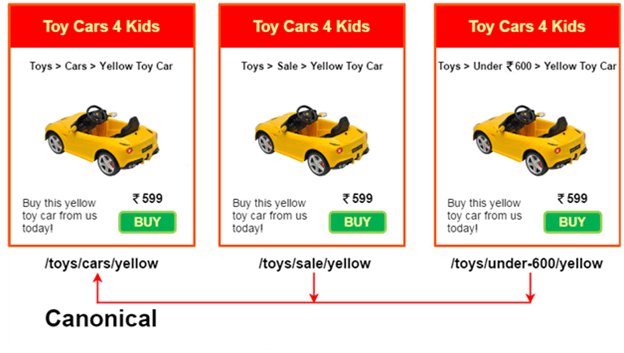

A canonical tag is an HTML element that specifies the canonical URL of a page and is used to prevent duplicate content issues. This tag is used when multiple versions of the same page are available over the internet.

It enables you to select the best URL as the canonical URL out of the several URLs of the multiple copies of a web page. When you mark one version as the canonical version, the search engine considers the other versions to be variations of the canonical or original version. Thus, it is used to resolve content duplication issues.

In this image, we have marked the URL of the page "www.example.com/toys/cars/yellow" as the canonical URL. So, Google will consider it the original page and the other two pages as its variations, not duplicates.

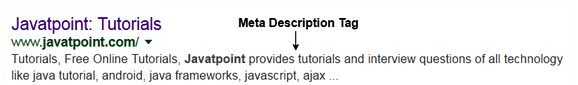
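In HTML, the canonical URL is commonly declared with a `link` element whose `rel` attribute is `canonical`. The sketch below reads that value from a page using Python's standard `html.parser`; the class and function names are hypothetical, and the sample page with its `https://` scheme is an assumption built around the example URL above.

```python
from html.parser import HTMLParser

class CanonicalParser(HTMLParser):
    """Remembers the href of the first <link rel="canonical"> element."""

    def __init__(self):
        super().__init__()
        self.canonical = None

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        rel_values = (attributes.get("rel") or "").lower().split()
        if tag == "link" and "canonical" in rel_values and self.canonical is None:
            self.canonical = attributes.get("href")

def canonical_url(html):
    """Return the canonical URL declared in the page, or None if absent."""
    parser = CanonicalParser()
    parser.feed(html)
    return parser.canonical

# Hypothetical page: one of several copies pointing at the preferred URL.
PAGE = (
    "<html><head>"
    '<link rel="canonical" href="https://www.example.com/toys/cars/yellow">'
    "</head><body></body></html>"
)
print(canonical_url(PAGE))
```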

Meta descriptions are HTML meta tags that provide brief information about the content of a web page. They act as preview snippets of the web pages and appear under the URL of your page in SERPs.

A relevant and compelling meta description brings users from search engine result pages to your website, and thus it also improves the click-through rate (CTR) for your webpage.

Google has many factors in its algorithm to rank the websites in SERPs. Using its algorithm, it finds the relevant results for the users' queries.

Google keeps updating its ranking factors to give users the best experience and to put a check on black hat SEO techniques. So, Google displays results on the basis of ranking factors such as content, backlinks and mobile optimization.

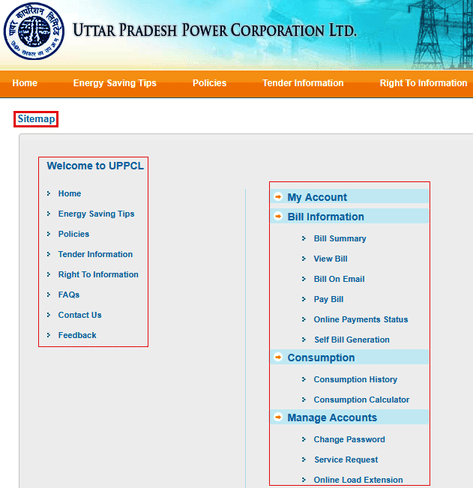

A sitemap refers to the map of a website. It is the detailed structure of a site that includes different sections of your site with internal links.

An HTML sitemap is an HTML page that contains all the links of all the web pages of a website, i.e., it contains all formatted text files and linking tags of a website. It outlines the first and second level structure of a site so that users can easily find information on the site.

Thus, it improves the navigation of websites with multiple web pages by listing all the web pages in one place in a user-friendly manner. The HTML sitemaps provide a strong foundation for all the web pages of a website and are primarily concerned with users.

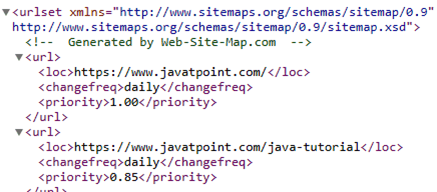

An XML sitemap is exclusively designed for the search engines. It facilitates the functionality of the search engines as it informs the search engines about the number of web pages, the frequency of their updates including the recent updates. This information helps search engine robots in indexing the website. See the image of the sitemap of a website:
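As a rough illustration of what such a file contains, the following sketch builds a small XML sitemap with Python's standard `xml.etree.ElementTree`. The element names (`urlset`, `url`, `loc`, `lastmod`, `changefreq`) and the namespace follow the sitemaps.org protocol; the function name `build_sitemap`, the URLs, and the dates are made up for this example.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"

def build_sitemap(entries):
    """Build sitemap XML from (URL, last-modified date, change frequency) tuples."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod, changefreq in entries:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        ET.SubElement(url, "lastmod").text = lastmod
        ET.SubElement(url, "changefreq").text = changefreq
    return ET.tostring(urlset, encoding="unicode")

# Hypothetical pages of an example site.
sitemap_xml = build_sitemap([
    ("https://www.example.com/", "2022-12-06", "weekly"),
    ("https://www.example.com/about", "2022-11-01", "yearly"),
])
print(sitemap_xml)
```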

A 301 redirect is a method of permanently redirecting users and search engines from an old page URL to a new page URL. It is used to pass link value and traffic from the old URL to the new URL, and visitors reach the new location without typing its URL. It helps you maintain the domain authority and search rankings when the URL of a site is changed for any reason.

Furthermore, it allows you to associate common web conventions with one URL to improve the domain authority; to rename or rebrand a site with a new URL; to direct traffic to a new website from other URLs of the same organization. So, you must set up a 301 redirect before moving to a new domain.
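The decision a server makes for a moved page can be sketched as a simple lookup. This is a toy model rather than a real web server: the `REDIRECTS` table, the `LIVE_PAGES` set, and the function `respond` are hypothetical names, and only the status codes come from Python's standard `http.HTTPStatus`.

```python
from http import HTTPStatus

# Hypothetical site: one page has moved permanently to a new path.
REDIRECTS = {"/old-page": "/new-page"}
LIVE_PAGES = {"/", "/new-page"}

def respond(path):
    """Return (status, redirect target) for a requested path."""
    if path in REDIRECTS:
        # 301: tell browsers and crawlers that the move is permanent.
        return HTTPStatus.MOVED_PERMANENTLY, REDIRECTS[path]
    if path in LIVE_PAGES:
        return HTTPStatus.OK, None
    return HTTPStatus.NOT_FOUND, None

for path in ("/old-page", "/new-page", "/missing"):
    status, target = respond(path)
    print(path, int(status), status.phrase, target)
```

Without the entry in `REDIRECTS`, a request for the old path would fall through to the 404 case instead.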

A 404 error is an HTTP response status code which indicates that the requested page could not be found on the server. This error is generally displayed in the internet browser window just like web pages.

An HTTP 404 error is technically a client-side error, i.e., it belongs to the class of errors in which the request refers to something the server cannot provide: the requested page is not present on the website. You typically receive this error when you mistype a URL or when a web page or resource is deleted or moved without redirecting the old URL to the new one. So, whenever you move a web page, redirect the old URL to the new URL to avoid this error, as it may affect the SEO of your site.

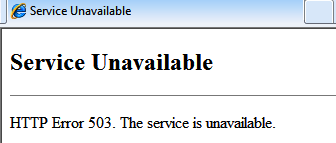

The "503 Service Unavailable" error is an HTTP status code which indicates the server is not available right now to handle the request. It often occurs when the server is too busy or when maintenance is performed on it. Generally, it is a temporary state which is resolved shortly.

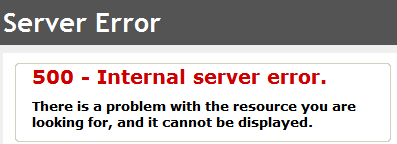

The "500 Internal Server Error" is a common error. It is an HTTP status code that indicates something is wrong with the website's server, but the server cannot be more specific about the problem. This error is generic, as it can occur for different reasons. It is a server-side error, which means the problem is with the website's server, not with your PC, browser, or internet connection.
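The difference between client-side and server-side errors follows from the numeric range of the status code: 4xx codes are client errors and 5xx codes are server errors. A small sketch using Python's standard `http.HTTPStatus` (the function name `describe` is hypothetical):

```python
from http import HTTPStatus

def describe(code):
    """Return the standard phrase for a status code and which side it blames."""
    status = HTTPStatus(code)
    if 400 <= code < 500:
        side = "client error"
    elif 500 <= code < 600:
        side = "server error"
    else:
        side = "not an error"
    return f"{code} {status.phrase} ({side})"

for code in (404, 500, 503):
    print(describe(code))
```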

Image Alt text is a feature which is added to an image tag in HTML. It appears in the blank image box when the image is not displayed due to slow connection, broken URL or any other reason.

It provides information about the image to the search engines as they cannot see or interpret images. Thus, it enables you to optimize images or improve the SEO of your site.
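A quick audit for images without alt text might be sketched as follows, using Python's standard `html.parser`. The class name `AltAudit`, the function `images_missing_alt`, and the sample markup are assumptions for this illustration. Note that an empty alt attribute can be intentional for purely decorative images, so the result is a list of candidates to review rather than a list of errors.

```python
from html.parser import HTMLParser

class AltAudit(HTMLParser):
    """Collects the src of every <img> whose alt text is missing or empty."""

    def __init__(self):
        super().__init__()
        self.missing = []

    def handle_starttag(self, tag, attrs):
        if tag == "img":
            attributes = dict(attrs)
            if not (attributes.get("alt") or "").strip():
                self.missing.append(attributes.get("src"))

def images_missing_alt(html):
    """Return the src values of images that have no usable alt text."""
    audit = AltAudit()
    audit.feed(html)
    return audit.missing

# Hypothetical markup used only for this example.
SAMPLE = (
    '<img src="a.png" alt="Red toy car">'
    '<img src="b.png">'
    '<img src="c.png" alt="">'
)
print(images_missing_alt(SAMPLE))
```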

Google analytics is a freemium web analytics service of Google that provides you the detailed statistics of the website traffic. It was introduced in November 2005 to track and report the website traffic. It offers you free tools to understand and analyze your customers and business in one place.

It mainly comprises statistics and basic analytical tools capable of monitoring the performance of your site. It tells you various important things about your website like your visitors, their activity, dwell time metrics, incoming keywords or search-terms, etc.

Thus, it helps you take the necessary steps to improve the SEO of your site and online marketing strategies. Anyone with a Google account can use this tool.

The reports generated by Google Analytics can be divided into four different types of analysis, which are as follows:

Audience Analysis: It gives you an overview of your visitors. Some of the key benefits of this analysis are as follows:

Acquisition Analysis: It helps you identify the sources from which your website traffic comes. Some key benefits of this analysis are as follows:

Behavior Analysis: It helps you monitor users' behavior. It offers you the following benefits:

Conversion Analysis: Website conversion analysis is an important part of the SEO process. Every website has a particular goal, such as generating leads, selling products or services, or increasing targeted traffic. When the goal is achieved, it is known as a conversion. Some major benefits of this analysis are as follows:

We can check which pages of a website are indexed by Google in two different ways:

PageRank is one of the important ranking factors that Google uses to rank the web pages on the basis of quality and quantity of links to the web pages. It determines a score of a webpage's importance and authority on a scale of 0 to 10. A webpage with more backlinks will have a higher PageRank than a webpage that has fewer backlinks. It was invented by Google's founders: Larry Page and Sergey Brin.

The PageRank of your page indicates how well the page performs. It depends on many factors such as quality of content, SEO, backlinks and more. So, to increase the PageRank of a website you have to focus on multiple factors, e.g., you have to provide unique and original content and build more backlinks from authority sites and web pages that themselves have a high PageRank.
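The idea that pages with more and better backlinks score higher can be sketched with a simplified version of the PageRank iteration. This is a teaching sketch, not Google's production ranking: the three-page link graph is made up, the damping factor of 0.85 is an assumed value, and the raw scores it produces sum to 1 rather than lying on a 0 to 10 scale.

```python
def pagerank(links, damping=0.85, iterations=50):
    """links maps each page to the list of pages it links to.

    Every link target must also appear as a key in `links`.
    """
    pages = list(links)
    n = len(pages)
    rank = {page: 1.0 / n for page in pages}  # start with equal scores
    for _ in range(iterations):
        new_rank = {page: (1.0 - damping) / n for page in pages}
        for page, outlinks in links.items():
            if outlinks:
                # A page shares its score equally among the pages it links to.
                share = damping * rank[page] / len(outlinks)
                for target in outlinks:
                    new_rank[target] += share
            else:
                # A page with no outgoing links spreads its score over all pages.
                share = damping * rank[page] / n
                for other in pages:
                    new_rank[other] += share
        rank = new_rank
    return rank


# Hypothetical graph: pages "a" and "b" both link to "c", and "c" links back to "a".
graph = {"a": ["c"], "b": ["c"], "c": ["a"]}
scores = pagerank(graph)
print(sorted(scores, key=scores.get, reverse=True))
```

In this graph, page "c" has two backlinks and ends up with the highest score, while "b", which nobody links to, ends up with the lowest.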

Domain Authority (DA) is a metric introduced by Moz. It scores a website on a scale of 1 to 100, where "1" is considered the worst and "100" is considered the best. The higher the DA, the more likely the site is to rank well on search engine result pages. It is Moz's prediction of ranking ability rather than a factor used by Google itself, so treat it as a comparative indicator of how well your website may rank in search engines.

A blog is a website, or a section of a website, that is regularly updated with new information. Blogs are usually written by an individual or a small group of people, in an informal or conversational style.

It is like an online diary or a book located on a website. The content of a blog generally includes text, pictures, videos, etc. A blog may be written for personal use or sharing information with a specific group or to engage the public. Furthermore, the bloggers can set their blogs for private or public access.

Some of the most used social media channels are:

Blogging Platforms: Blogger, WordPress, Tumblr, Medium, Ghost, Squarespace, etc.

Social bookmarking sites: Digg, Jumptags, Delicious, Dribble, Pocket, Reddit, Slashdot, StumbleUpon, etc.

Social networking sites: Facebook, WhatsApp, Instagram, LinkedIn, Twitter, Google+, Skype, Viber, Snapchat, Pinterest, Telegram, etc.

Video Sharing sites: YouTube, Vimeo, Netflix, Metacafe, Liveleak, Ustream, etc.

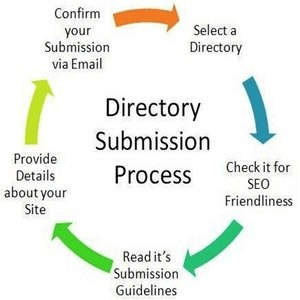

Directory submission is an off-page SEO technique that helps improve the SEO of your site. It allows you to submit your site to a specific category of a web directory, e.g., if your website is about health, you are supposed to submit your site to the health category of a web directory.

You are required to follow the guidelines of a web directory before submitting your site. Some of the popular directory submission sites are www.dmoz.org, www.seofriendly.com and www.directorylisting.com.

Submitting sites to a search directory is used to get backlinks to your website. It is one of the key methods to improve the SEO of a site. The more backlinks you get, the higher the probability that the search engine will index your site sooner than if it were not listed. Directory submission is mostly free.

You can also enter a site title, which is different from the URL, containing your keywords. In this way, you can generate anchor text for your site. Furthermore, most search directories rank well in the search engine result pages, so if you submit your site to such directories, your website has a better chance of receiving a high PageRank.

Search engine submission is an off-page SEO technique. In this technique, a website is submitted directly to the search engine for indexing in order to increase its online recognition and visibility. It is an initial step to promote a website and get search engine traffic. A website can be submitted in two ways: one page at a time, or the entire site at once using a webmaster tool.

Press release submission is an off-page SEO technique in which you write press releases and submit them to popular PR sites to build backlinks or to increase the online visibility of a website.

A press release generally contains information about events, new products or services of the company. It should be keyword-optimized, factual and informative so that it engages the readers.

Forum posting is an off-page SEO technique. It involves generating quality backlinks by participating in online discussion forums. In forum posting, you can start a new thread as well as reply to existing threads to get quality backlinks to your site. Forum websites, which include message boards, discussion groups, discussion forums and bulletin boards, are online discussion sites that allow you to take part in discussions and interact with new users to promote your websites, web pages and more.

RSS feed submission is an off-page SEO technique. It refers to the submission of RSS feeds to RSS submission directory sites to improve the SEO of your site. RSS stands for Rich Site Summary and is also known as Really Simple Syndication.

An RSS feed generally contains updated web pages, videos, images, links and more. It is a format for delivering frequently changing web content. Users who find these updates interesting can subscribe to your RSS feed to receive timely updates from your website. Thus, it helps increase traffic to your website.
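To make the format concrete, here is a minimal sketch that builds an RSS 2.0 feed with Python's standard `xml.etree.ElementTree` module. The blog title and URLs are placeholder values, and a real feed would usually carry more fields per item (description, publication date and so on).

```python
import xml.etree.ElementTree as ET


def build_rss(title, link, description, items):
    """Build a minimal RSS 2.0 document; `items` is a list of (title, link) pairs."""
    rss = ET.Element("rss", version="2.0")
    channel = ET.SubElement(rss, "channel")
    ET.SubElement(channel, "title").text = title
    ET.SubElement(channel, "link").text = link
    ET.SubElement(channel, "description").text = description
    for item_title, item_link in items:
        item = ET.SubElement(channel, "item")
        ET.SubElement(item, "title").text = item_title
        ET.SubElement(item, "link").text = item_link
    return ET.tostring(rss, encoding="unicode")


feed = build_rss(
    "Example Blog",                      # placeholder site name
    "https://example.com/",
    "Latest posts from Example Blog",
    [("First post", "https://example.com/first-post")],
)
print(feed)
```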

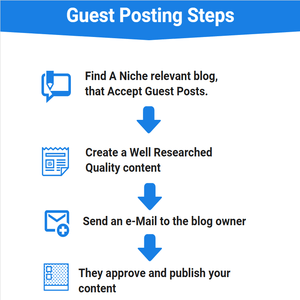

Guest posting is an off-page SEO technique in which you publish your article or post on another person's website or blog. In other words, when you write a post for your own blog, it is simply a post, but when you write a post for someone else's blog, it becomes a guest post and you become a guest writer. So, guest posting is the practice of contributing a post to someone else's blog to build authority and links. See the basic steps involved in guest posting:

The Google algorithm is a set of rules, codes or commands that enables Google to return search results relevant to the queries made by users. It is Google's algorithm that allows it to rank websites on SERPs on the basis of quality and relevancy. Websites with quality content and relevant information tend to remain at the top of the SERPs.

So, Google is a search engine that relies on a dynamic set of rules, called an algorithm, to provide the most appropriate and relevant search results based on users' queries.

Google Panda is a Google algorithm update. It was first introduced in 2011 to reward high-quality websites and demote low-quality websites in SERPs. It was initially called "Farmer."

Panda addressed many issues in Google SERPs, such as:

Mobilegeddon is a search engine ranking algorithm introduced by Google on 21 April 2015. It was designed to promote mobile-friendly pages in Google's mobile search results. It ranks the websites based on their mobile friendliness, i.e., the mobile-friendly sites are ranked higher than the sites that are not mobile friendly. After this algorithm update, mobile friendliness has become an important factor in ranking the websites in the SERPs.

A Google penalty refers to a negative impact on the search rankings of a website. It can be automatic or manual, i.e., it may result from an algorithm update or be imposed for using black hat SEO techniques. If the penalty is manual, Google informs you about it through the webmaster tool. However, if the penalty is automatic, such as one caused by an algorithm update, you may not be informed. Google generally imposes the penalty in three different ways: Bans, Rank Demotion, and Temporary Rank Change.

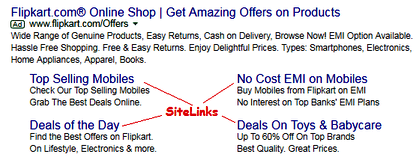

Google Sitelinks are little sub-listings that appear under some search results in a SERP. Google adds Sitelinks only if it thinks they are useful for the user; otherwise, it does not show any. It uses automated algorithms to shortlist and display Sitelinks. The four links under flipkart.com, in the image given below, are known as "Sitelinks."

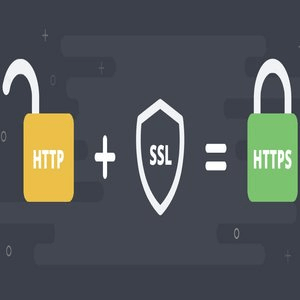

HTTPS, which stands for Hypertext Transfer Protocol Secure, is a protocol for secure communication on the World Wide Web. It uses TLS (Transport Layer Security, the successor to SSL, Secure Sockets Layer) to add a layer of security to the standard HTTP connection, i.e., it is a secure version of HTTP. It encrypts all data exchanged between the server and the browser.

On websites that use the HTTP protocol, data is transmitted between the site server and the browser as plain text, so anyone who intercepts the connection can read it. Earlier, only websites that handled sensitive data like credit card information used HTTPS, but now almost all sites prefer HTTPS over HTTP. An HTTPS connection provides the following benefits:

Hidden text is one of the oldest black hat SEO techniques used to improve the ranking of a site. Hidden text, which is also known as invisible or fake text, is content that your visitors can't see but that search engines can still read.

Using hidden text to improve the ranking of a webpage is against the guidelines of search engines. Search engines can detect hidden text in a webpage, treat it as spam and ban your site temporarily or permanently. So it should be avoided by SEOs.

Keyword density refers to the percentage of occurrence of a keyword in a webpage out of all the words on that page. For example, if a keyword appears four times in an article of 100 words, the keyword density would be 4%. It is also known as keyword frequency, as it describes how frequently a keyword occurs in a page. There is no ideal or exact keyword density for better ranking. However, a keyword density of 2 to 4% is often considered suitable for SEO.
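The keyword density calculation can be sketched as follows. This is a minimal version that assumes a single-word keyword and a very simple definition of a "word"; the function name is hypothetical.

```python
import re


def keyword_density(text: str, keyword: str) -> float:
    """Return the keyword's share of all words in the text, as a percentage."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    if not words:
        return 0.0
    hits = words.count(keyword.lower())
    return 100.0 * hits / len(words)


# A 100-word text in which the keyword appears four times.
sample = "seo " * 4 + "word " * 96
print(keyword_density(sample, "SEO"))  # 4.0
```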

Keyword stuffing refers to increasing the keyword density beyond a certain level to achieve higher ranking in the SERPs. As we know, web crawlers analyze keywords to index the web pages, so some SEO practitioners exploit this feature of the search engine by increasing the keywords in a page. This way of improving the ranking is against the guidelines of Google, so it is considered a black hat SEO technique, and it should be avoided.

Article spinning is a black hat SEO technique used to improve the SEO of a website. In this technique, SEO practitioners rewrite a single article to produce multiple copies of it in such a way that each copy is treated as a new article. These articles have low-quality, repetitive content. Such articles are frequently uploaded to the site to create the illusion of fresh content.

Doorway pages, which are also known as gateway pages, portal pages or entry pages, are created exclusively to improve ranking in the SERPs. They do not contain quality content or relevant information, and they are stuffed with keywords and links. They are created to funnel visitors into the actual, usable or relevant portion of your site. A doorway page acts as a door between the users and your main page. Black hat SEO professionals use doorway pages to improve the ranking of a website for specific search queries or keywords.

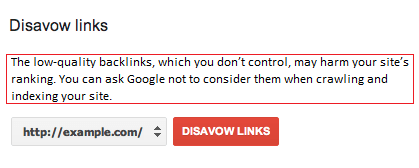

The Disavow tool is a part of Google Search Console that was introduced in October 2012. It enables you to discount the value of a backlink in order to prevent link-based penalties. It also protects the site from bad links that may harm the website's reputation.

Using this tool, you can tell Google that you don't want certain links to be considered when it ranks your website. Sites that have bought links may suffer a penalty if they don't get these links removed or disavowed. Low-quality backlinks that you don't control may also harm your site's ranking. You can ask Google not to take them into account when assessing your site.
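The file uploaded to the Disavow tool is a plain text file with one entry per line: a full URL, or a whole domain prefixed with `domain:`, with `#` marking comment lines. A small sketch for generating such a file is shown below; the function name and the example domains are hypothetical.

```python
def build_disavow_file(domains, urls, note=""):
    """Return the text of a disavow file: optional comment, domains, then URLs."""
    lines = []
    if note:
        lines.append(f"# {note}")
    lines.extend(f"domain:{domain}" for domain in sorted(domains))
    lines.extend(sorted(urls))
    return "\n".join(lines) + "\n"


print(build_disavow_file(
    domains=["spam.example"],
    urls=["http://bad.example/page.html"],
    note="Links we asked to have removed without success",
))
```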

Fetch as Google is a tool available in Google's webmaster tools. It is used to request immediate indexing and to find issues with your web pages and website. You can also use it to see how Google crawls or renders a URL on your site. Furthermore, if you find and fix technical errors such as "404 not found" or "500 website is not available", you can simply submit your page or website for a fresh crawl using this tool.

The robots meta tag is used to give instructions to web spiders. It tells the search engine how to treat the content of a page. It is a piece of code placed in the "head" section of a webpage.

Some of the main robots Meta tag values or parameters are as follows:

The syntax of a robots meta tag is very simple: `<meta name="robots" content="instructions for the crawler">`

In this syntax, "instructions for the crawler" is a placeholder that you replace with one or more values of the robots meta tag. Some commonly used values include index, follow, noindex, nofollow and more.
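As an illustration, the tag can be generated from two on/off choices. The `robots_meta` helper below is a hypothetical name, and it covers only the four common values mentioned above.

```python
def robots_meta(index: bool = True, follow: bool = True) -> str:
    """Build a robots meta tag from indexing and link-following choices."""
    directives = [
        "index" if index else "noindex",
        "follow" if follow else "nofollow",
    ]
    return '<meta name="robots" content="{}">'.format(", ".join(directives))


# Keep the page out of the index but let crawlers follow its links.
print(robots_meta(index=False, follow=True))
```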

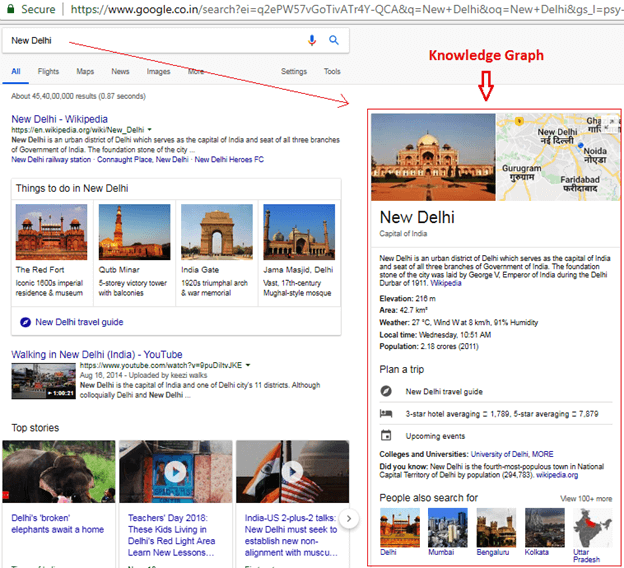

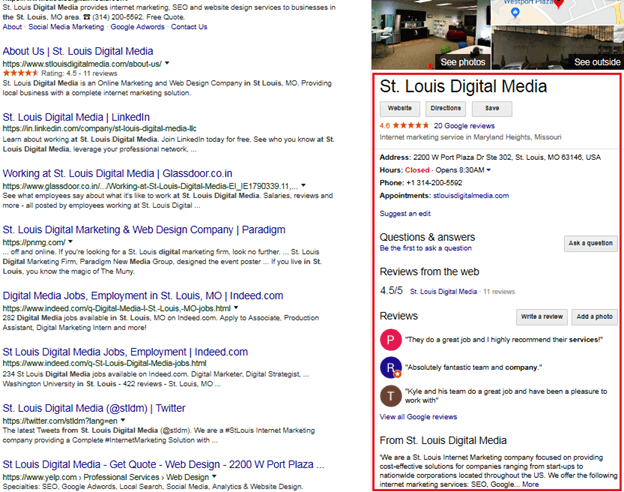

Google Knowledge Graph refers to a block of information that appears on the right side of the SERPs after entering a search query. It was launched by Google in 2012 and is also known as the Knowledge Graph Card.

It provides information systematically using images, content, graphs, etc. It creates interconnected search results to make information more appealing, accurate, structured and relevant. So, it is an enhancement of the organic Google search results, as it understands facts about people, places, objects, etc.

Google Sandbox is an imaginary area that holds new and less authoritative sites for a period of time before they can be displayed normally in the search results. It is an alleged filter for new websites. In simple words, we can say that it places new websites on probation and ranks them lower than expected in searches. It may be triggered by building too many links within a short period of time.

Any type of website can be placed in the sandbox. However, new websites that want to rank for highly competitive keyword phrases are more prone to it. There is no fixed duration for a site to stay in the sandbox. Generally, a website can stay in the sandbox for one to six months. The logic behind the sandbox is that new websites may not be as relevant as older sites.

Google My Business is a free tool from Google that is designed to help you create and manage your business listings on SERPs, i.e., to manage your online presence on Google. Using this tool, you can easily create and update your business listings. For example, you can:

An SEO audit refers to a process that evaluates the search engine friendliness of a website. It grades a site for its ability to appear in SERPs, i.e., it is like a report card for your site's SEO.

It helps you find issues with your site. You can resolve these issues to boost the ranking of your pages or increase your sales. Furthermore, by doing an SEO audit, you can do the following:

Some SEO audit tools:

AMP, which stands for Accelerated Mobile Pages, is an open source project that helps publishers improve the speed and readability of their pages on mobile devices. It makes mobile pages easier to read and quicker to load for a better user experience. This project was introduced jointly by Google, WordPress, Adobe and some other companies in 2015.

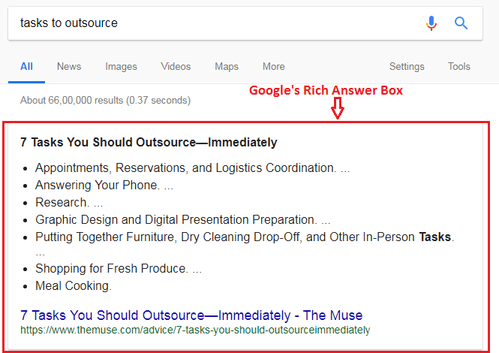

Google's rich Answer box is a short, rich featured snippet of relevant information that appears in the form of a box at the top of the SERPs. Google introduced this feature in 2015 to provide quick and easy answers to queries of users by featuring a snippet of information in a box at the top of the search engine result page. The rich answers may appear in different forms such as recipes, stock graphs, sports scores, etc. See the image given below:

There are plenty of ways to optimize your content or site for Google's rich answer box. Some of the commonly used methods are as follows:

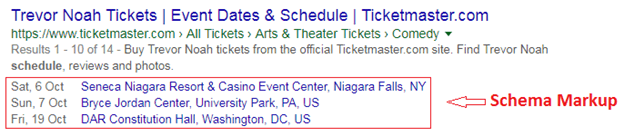

Schema markup, which is also known as structured data, is code or microdata that you incorporate in your web pages to help search engines better understand your pages and provide more relevant results to users. It helps search engines interpret and categorize the information that you want to highlight and have presented as a rich snippet.

The schema does not affect the crawling. It just changes the way the information is displayed and assigns a meaning to the content. So, it tells the search engine what your data means rather than just telling what it says.
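One common way to add schema markup is a JSON-LD script block that uses the schema.org vocabulary. The sketch below generates such a block for an article. JSON-LD is chosen here as an assumption, since the text mentions only "code or microdata", and the headline, author and date are placeholder values.

```python
import json


def article_json_ld(headline: str, author: str, date_published: str) -> str:
    """Return a JSON-LD <script> block describing an article with schema.org terms."""
    data = {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "author": {"@type": "Person", "name": author},
        "datePublished": date_published,
    }
    return '<script type="application/ld+json">\n{}\n</script>'.format(
        json.dumps(data, indent=2)
    )


print(article_json_ld("SEO basics", "Jane Doe", "2022-12-06"))
```

The markup states what each value means (a headline, an author, a publication date) rather than only what the text says.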

CTR stands for Click-Through Rate. It is calculated by dividing the number of times a link is clicked by users by the number of times it appears on a search engine result page (impressions). For example, if you have 10 clicks and 100 impressions, your CTR would be 10%.

The more clicks you get for the same number of impressions, the higher the CTR. A high click-through rate is essential for a successful PPC campaign. Thus, it is an important metric in PPC ads that helps you gauge results and tells you how effective your campaigns are.
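The click-through rate calculation can be written as a one-line formula, clicks divided by impressions, expressed as a percentage. The function name below is illustrative.

```python
def click_through_rate(clicks: int, impressions: int) -> float:
    """Return CTR as a percentage: clicks divided by impressions."""
    if impressions == 0:
        return 0.0  # no impressions means there is nothing to measure
    return 100.0 * clicks / impressions


print(click_through_rate(10, 100))  # 10.0
```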

PPC stands for pay-per-click. It is a type of search engine marketing in which you pay a fee each time your advertisement is clicked by an online user. Search engines like Google, Bing, etc., offer pay-per-click advertising on an auction basis, where the highest bidder gets the most prominent advertising space on the SERPs so that the ad gets the maximum number of clicks.

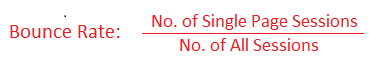

Bounce rate refers to the percentage of single-page visits, in which the visitor views only one page of your website and then leaves from the landing page without browsing other pages. In simple words, it is the single-page sessions divided by all sessions. Google Analytics reports the bounce rate of a web page or a website.

Bounce rate tells you how useful visitors find your site, e.g., if the bounce rate is too high, it indicates that your site does not contain relevant information, or that the information is not useful for the visitors.
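The "single-page sessions divided by all sessions" definition can be sketched as follows, assuming each session is represented simply by the number of pages viewed in it (a simplification of what an analytics tool records).

```python
def bounce_rate(pages_per_session) -> float:
    """Return the percentage of sessions in which exactly one page was viewed."""
    sessions = list(pages_per_session)
    if not sessions:
        return 0.0
    bounces = sum(1 for pages in sessions if pages == 1)
    return 100.0 * bounces / len(sessions)


# Four sessions, two of which viewed a single page.
print(bounce_rate([1, 3, 1, 5]))  # 50.0
```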

Alexa.com is a website and a subsidiary company of Amazon.com that provides a wide range of services out of which one is Alexa rank. This rank is a metric that ranks websites in a particular order on the basis of their popularity and website traffic in the last three months.

Alexa generally considers the unique daily visitors and average page views over a period of 3 months to calculate the Alexa rank for a website. Alexa rank is updated daily. The lower the Alexa rank, the more popular a site will be. An increase or decrease in the rank shows how your SEO campaigns are doing.

RankBrain is Google's machine-learning artificial intelligence system designed to help Google process search queries and deliver more relevant information to users. It is a part of Google's Hummingbird search algorithm. It can learn and recognize new patterns and then revisit SERPs to provide more relevant information.

It has the ability to embed the written language into mathematical entities called vectors that Google can understand. For example, if it does not understand a sentence, it can guess its meaning with similar words or sentences and filter the information accordingly to provide accurate and relevant results to users.

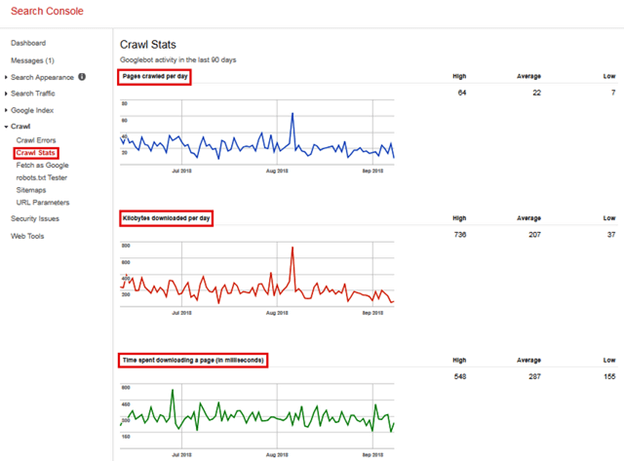

The crawl stats give an overview of Googlebot's activity on your website for the last 90 days. The crawl number tends to increase as you increase the size of your site by adding more content or web pages.

The crawl stats typically provide the following information:

The referral traffic refers to the visitors that come to your site from the direct links on other websites rather than from the search engine. In simple words, the visits to your domain directly from other domains are called referral traffic. For example, a site that likes your page may post a link recommending your page. The visitor on this site may click on this link and visit your site.

You can also increase referral traffic by leaving links on other blogs, forums, etc. When you put a hyperlink to your page on other websites such as forums, users may click it and visit your webpage. Google tracks such visits as referral visits or traffic. So, it is Google's way of reporting visits that come to your site from sources outside of its search engine.
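How a visit might be labelled from its referrer URL can be sketched with Python's standard `urllib.parse` module. The list of search engine hosts and the `www.example.com` site name are illustrative assumptions; real analytics tools use far more detailed rules.

```python
from urllib.parse import urlparse

# Illustrative, incomplete list of search engine hosts.
SEARCH_ENGINE_HOSTS = {"www.google.com", "www.bing.com", "duckduckgo.com"}


def classify_visit(referrer: str, own_host: str = "www.example.com") -> str:
    """Label a visit by where it came from, based on the referrer URL."""
    if not referrer:
        return "direct"  # no referrer, e.g. a typed URL or a bookmark
    host = urlparse(referrer).netloc.lower()
    if host == own_host:
        return "internal"
    if host in SEARCH_ENGINE_HOSTS:
        return "organic search"
    return "referral"  # a link on some other website


print(classify_visit("https://forum.example.org/thread/42"))
```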

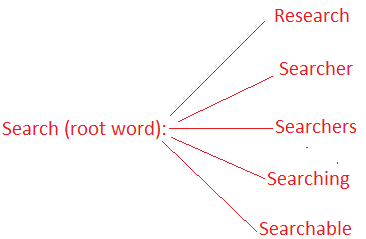

Keyword stemming is the process of finding the root word of a search query and then creating new keywords by adding prefixes, suffixes and pluralizing the root word. For example, a query "Searcher" can be broken down to the word "search" and then more words can be created by adding prefixes, suffixes or pluralizing this root word, such as research, searcher, searchers, searching, searchable, etc.

Similarly, you can add the prefix "en" to "large" to make it "enlarge" and add a suffix "ful" to "power" to make it "powerful." This practice allows you to expand your keyword list and thus helps get more traffic.
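Expanding a root word into a keyword list can be sketched with a small helper. The function name is hypothetical, and the approach is deliberately naive: it simply joins strings, so it does not handle spelling changes such as dropping a final "e".

```python
def expand_keyword(root, prefixes=(), suffixes=()):
    """Return the root word followed by its prefixed and suffixed variants."""
    variants = [root]
    variants += [prefix + root for prefix in prefixes]
    variants += [root + suffix for suffix in suffixes]
    return variants


print(expand_keyword("search", prefixes=["re"], suffixes=["er", "ers", "ing", "able"]))
```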

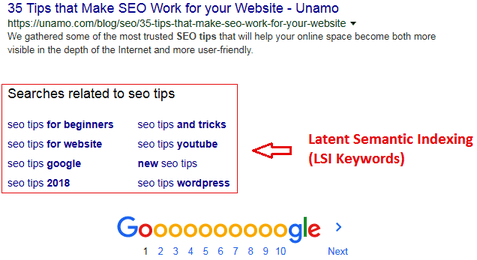

LSI stands for Latent Semantic Indexing. It is often described as a part of Google's algorithm that enables the search engine to understand the content of a page and the intent of search queries. It identifies related words in content to better classify web pages and thus deliver more relevant and accurate search results. It can understand synonyms and the relationships between words, and thus can interpret web pages more deeply to provide relevant information to users. For example, if someone searches with the keyword "CAR," it will show related things such as car models, car auctions, car races, car companies and more.
